Introduction

In the world of self-hosted applications and microservices, structured logging is essential for debugging, monitoring, and long-term storage. JSON logs, like those generated by Authelia, are machine-readable but can become difficult to manage as they grow over time. Instead of relying on flat files or rotating logs manually, converting logs into a searchable, structured database format is a better long-term solution. The sandbox99.cc blog platform hosts multiple containerized web applications, including VS Code Server, Webtop (a Docker-based Linux environment), and Portainer. All these services are secured behind Authelia’s two-factor authentication system for enhanced protection.

In this guide, we’ll show how to build a lightweight Python script that monitors a JSON-based authelia.log file and stores unique entries into a SQLite database. This approach is ideal for small to mid-sized setups where running a full logging stack like ELK or Loki is overkill.

Why Store Logs in a Database?

- 🔍 Searchability: Easily query logs using SQL.

- 📅 Persistence: Avoid accidental deletion or loss from log rotation.

- 🔢 Structure: Enforce consistent fields such as timestamp, level, username, and IP.

- 📊 Analysis Ready: Export, analyze, or visualize logs more easily.

Log Format Assumption

This guide assumes that your authelia.log file consists of newline-delimited JSON entries, each containing fields like:

{"error":"read tcp 10.20.107.206:9091-\u003e10.20.107.120:59396: i/o timeout","level":"error","method":"GET","msg":"Request timeout occurred while handling request from client.","path":"/","remote_ip":"10.20.107.120","status_code":408,"time":"2025-05-08T23:28:29+08:00"}

{"error":"read tcp 10.20.107.206:9091-\u003e10.20.107.120:59364: i/o timeout","level":"error","method":"GET","msg":"Request timeout occurred while handling request from client.","path":"/","remote_ip":"10.20.107.120","status_code":408,"time":"2025-05-08T23:28:29+08:00"}

{"error":"user not found","level":"error","method":"POST","msg":"Error occurred getting details for user with username input 'superboi' which usually indicates they do not exist","path":"/api/firstfactor","remote_ip":"103.108.174.36","time":"2025-05-11T00:14:27+08:00"}

{"error":"user not found","level":"error","method":"POST","msg":"Error occurred getting details for user with username input 'superboi' which usually indicates they do not exist","path":"/api/firstfactor","remote_ip":"103.108.174.36","time":"2025-05-11T00:14:34+08:00"}

{"error":"user not found","level":"error","method":"POST","msg":"Error occurred getting details for user with username input 'aquaman' which usually indicates they do not exist","path":"/api/firstfactor","remote_ip":"103.108.174.36","time":"2025-05-11T00:14:45+08:00"}

{"error":"user not found","level":"error","method":"POST","msg":"Error occurred getting details for user with username input 'aquaman' which usually indicates they do not exist","path":"/api/firstfactor","remote_ip":"103.108.174.36","time":"2025-05-11T00:14:51+08:00"}

Project Structure

log_to_sqlite/ ├── authelia_log_monitor.log ├── authelia_logs.db └── authelia_log_monitor.py

The Python Script

Our script does the following:

- Initializes the SQLite database with the following schema:

- Every minute, reads the log file.

- Parses each JSON line.

- Checks for duplicates based on timestamp and message.

- Inserts only new entries. (No duplicate entry)

- Logs activity into

authelia_log_monitor.log(but only when changes occur).

#!/usr/bin/env python3

# Author: Jose Mendez

# Date: 05/10/2025

# Blog site: sandbox99.cc

# Description: This script monitors the Authelia log file for new entries,

# inserts them into an SQLite database, and avoids duplicates.

# This script is designed to run in a loop, checking the log file every minute.

# It requires Python 3 and the sqlite3 module.

# Usage: nohup sudo python3 authelia_log_monitor.py &

# --- Required Libraries ---

# pip install sqlite3

# --- Note ---

# This script assumes that the Authelia log file is in JSON format and

# that each line is a valid JSON object. Adjust the parsing logic if necessary.

# --- Import Libraries ---

import sqlite3

import time

import json

import os

import logging

from datetime import datetime

# --- Configuration ---

LOG_FILE_PATH = "/PATH_OF_YOUR_AUTHELIA/authelia.log"

DB_FILE_PATH = "/authelia_logs.db"

MONITOR_LOG_PATH = "/authelia_log_monitor.log"

# --- Logging Setup ---

logging.basicConfig(

filename=MONITOR_LOG_PATH,

level=logging.INFO,

format="%(asctime)s [%(levelname)s] %(message)s"

)

# --- Initialize the SQLite Database ---

def initialize_db():

try:

conn = sqlite3.connect(DB_FILE_PATH)

cursor = conn.cursor()

cursor.execute('''

CREATE TABLE IF NOT EXISTS logs (

id INTEGER PRIMARY KEY AUTOINCREMENT,

timestamp TEXT NOT NULL,

level TEXT,

remote_ip TEXT,

msg TEXT,

raw_json TEXT,

UNIQUE(timestamp, msg)

)

''')

conn.commit()

conn.close()

logging.info("Database initialized successfully.")

except Exception as e:

logging.error(f"Failed to initialize database: {e}")

# --- Fetch Existing Logs ---

def fetch_existing_logs():

try:

conn = sqlite3.connect(DB_FILE_PATH)

cursor = conn.cursor()

cursor.execute("SELECT timestamp, msg FROM logs")

existing = set(cursor.fetchall())

conn.close()

return existing

except Exception as e:

logging.error(f"Error fetching existing logs: {e}")

return set()

# --- Read Logs from File ---

def read_log_file():

if not os.path.exists(LOG_FILE_PATH):

logging.warning("Log file not found.")

return []

try:

with open(LOG_FILE_PATH, 'r') as file:

lines = file.readlines()

return [json.loads(line.strip()) for line in lines if line.strip()]

except Exception as e:

logging.error(f"Error reading log file: {e}")

return []

# --- Insert Unique Logs ---

def insert_new_logs(logs, existing_logs):

try:

conn = sqlite3.connect(DB_FILE_PATH)

cursor = conn.cursor()

new_count = 0

for log in logs:

timestamp = log.get("time")

msg = log.get("msg")

level = log.get("level")

remote_ip = log.get("remote_ip")

# Set placeholders if still None

remote_ip = remote_ip if remote_ip else "-"

if (timestamp, msg) not in existing_logs:

try:

cursor.execute('''

INSERT INTO logs (timestamp, level, remote_ip, msg, raw_json)

VALUES (?, ?, ?, ?, ?)

''', (timestamp, level, remote_ip, msg, json.dumps(log)))

new_count += 1

except sqlite3.IntegrityError:

continue # Skip duplicates

conn.commit()

conn.close()

if new_count > 0:

logging.info(f"Inserted {new_count} new log(s).")

except Exception as e:

logging.error(f"Error inserting logs: {e}")

# --- Main Monitoring Loop ---

def monitor_log_file():

initialize_db()

while True:

logs = read_log_file()

existing_logs = fetch_existing_logs()

insert_new_logs(logs, existing_logs)

time.sleep(60)

if __name__ == "__main__":

monitor_log_file()

Running the Script

You can run the script in the background with:

nohup sudo python3 authelia_log_monitor.py &

This way, it continuously monitors the log file every minute without requiring Docker, cron, or external tools.

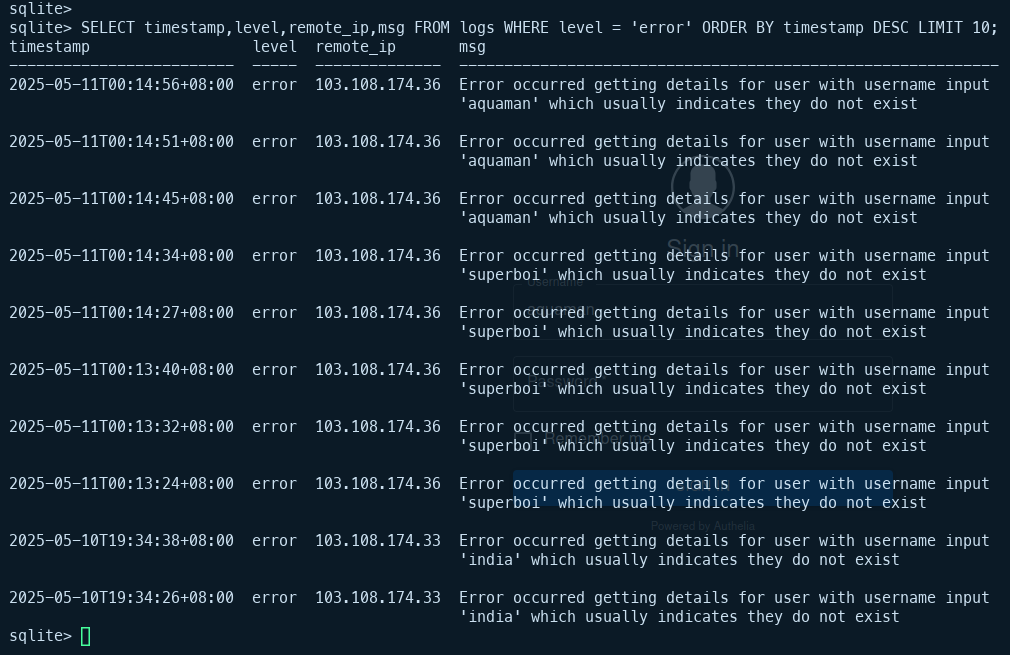

Querying the Logs

Once logs are inside SQLite, querying becomes easy:

sqlite3 authelia_logs.db sqlite> .headers on sqlite> .mode column sqlite> SELECT timestamp,level,remote_ip,msg FROM logs WHERE level = 'error' ORDER BY timestamp DESC LIMIT 10;

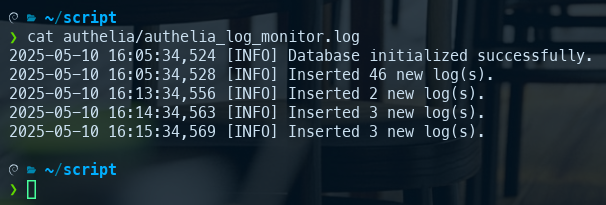

Checking authelia_log_monitor.log

cat authelia_log_monitor.log

Final Thoughts

Managing and analyzing logs doesn’t always require complex infrastructure. With just Python and SQLite, you can automate the process of capturing structured logs and storing them in a searchable format. This method provides a reliable, low-overhead way to preserve important security and access events — especially from tools like Authelia that emit well-structured JSON.

Whether you’re running a small homelab or a lightweight Docker-based authentication gateway, storing logs in SQLite can offer a good balance of simplicity, portability, and efficiency. Consider extending this script to support filtering, rotation, or even integration with web dashboards in future iterations.