Introduction

Have you ever wondered if your web application has hidden corners or forgotten pathways that could be exploited? In the world of cybersecurity, understanding every inch of your application’s surface is paramount. This is where web spidering comes into play, and OWASP ZAP, the powerful open-source web application security scanner, offers robust tools to do just that.

In this blog post, we’ll dive deep into OWASP ZAP’s spidering capabilities. You’ll learn what web spiders are, how ZAP’s two distinct spiders work, how to effectively use them, and advanced techniques to ensure you uncover every accessible resource of your web application.

Understanding OWASP ZAP’s Spiders

At its core, a web spider (often called a web crawler) is an automated program that browses the web, discovering and indexing content by systematically following links. In security testing, the spider identifies the URLs, forms, and other accessible resources of a target web application. The resulting map is the foundation for every later security assessment.

OWASP ZAP provides two distinct spidering engines, each suited to a different type of web application:

1. The Traditional Spider (HTTP Spider)

The Traditional Spider is ZAP’s classic and foundational crawling engine. It operates by:

- Following Links: It diligently parses the raw HTML, CSS, and JavaScript content of a page, extracting all href attributes, src attributes, form actions, and any other potential links it encounters.

- Making HTTP Requests: For every newly discovered link, it initiates a standard HTTP request to fetch the corresponding content, and then it repeats the parsing process on the newly retrieved data.
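The "following links" step is easier to see in code. Below is a minimal sketch that uses Python's standard html.parser. It illustrates the idea only and is not ZAP's actual parser, which is written in Java and understands many more link sources. The names LinkExtractor and extract_links are hypothetical.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin

# Attributes that commonly carry links; a real spider checks many more places.
LINK_ATTRIBUTES = {"href", "src", "action"}


class LinkExtractor(HTMLParser):
    """Collects absolute URLs from href, src and form action attributes."""

    def __init__(self, base_url: str) -> None:
        super().__init__()
        self.base_url = base_url
        self.links: list[str] = []

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if name in LINK_ATTRIBUTES and value:
                # Resolve relative links against the URL of the page being parsed.
                absolute = urljoin(self.base_url, value)
                if absolute not in self.links:
                    self.links.append(absolute)


def extract_links(base_url: str, html: str) -> list[str]:
    parser = LinkExtractor(base_url)
    parser.feed(html)
    parser.close()
    return parser.links


if __name__ == "__main__":
    page = """
    <a href="/about">About</a>
    <img src="logo.png">
    <form action="/login" method="post"></form>
    <script>document.write('<a href="/hidden">JS link</a>')</script>
    """
    for link in extract_links("http://example.com/", page):
        print(link)
```

Running it reports /about, /logo.png and /login. The /hidden link is written by an inline script, so a purely static parse never sees it. This is the same blind spot described under Limitations.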

Strengths:

- Speed: This spider is generally very fast and efficient, as it does not incur the overhead of rendering pages or executing complex JavaScript.

- Static Content: It is exceptionally effective for applications primarily constructed with static HTML and those that rely heavily on server-side rendering.

Limitations:

- JavaScript-Heavy Applications: It struggles with modern applications that rely heavily on JavaScript to load content or manage navigation. If a link or piece of content is generated by JavaScript after the initial page load, the Traditional Spider may miss it entirely.

2. The AJAX Spider

The AJAX Spider is OWASP ZAP’s sophisticated response to the inherent complexities of modern web applications, particularly Single Page Applications (SPAs) and those that make extensive use of Asynchronous JavaScript and XML (AJAX) to fetch and display content. It functions by:

- Browser Rendering: It launches a browser instance (such as Chrome or Firefox, often in headless mode) to render the web page in a real browser environment.

- JavaScript Execution: Within this browser, all JavaScript on the page is executed, and the spider simulates various user interactions to discover dynamically generated links, forms, and other resources that appear only after script execution.

- Event Simulation: It can simulate a wide array of Document Object Model (DOM) events (like clicks, hovers, form submissions) to uncover even more content and functionality.

Strengths:

- Modern Applications: It is essential for mapping JavaScript-rich applications, where content is loaded dynamically and navigation is driven on the client side.

- Comprehensive Discovery: It is far better at finding links and functionality that only appear after the client-side scripts have executed.

Limitations:

- Slower: Due to the significant overhead involved in launching, managing, and interacting with a full browser instance, it is considerably slower than the Traditional Spider.

- Resource-Intensive: It consumes a notably higher amount of CPU and memory resources, which should be considered for large-scale scans.

Using the Spiders in OWASP ZAP

Before you initiate any spidering activity, ensure you have OWASP ZAP properly installed on your system and that your target web application is fully accessible (whether it’s running locally on your machine or hosted on a network).

1. Launching the Traditional Spider

There are a couple of straightforward ways to kick off the Traditional Spider:

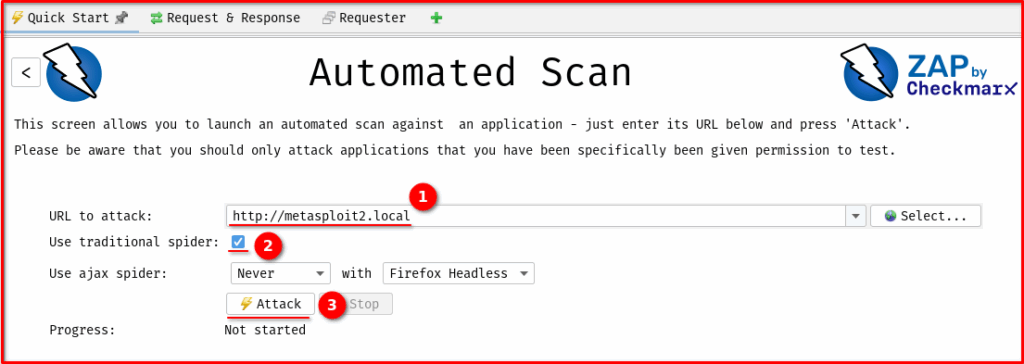

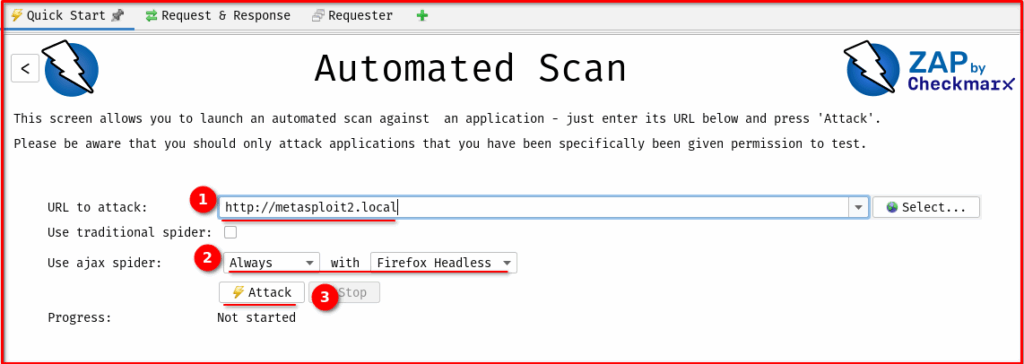

a. Via “Automated Scan” (Quick Start):

- Open the “Quick Start” tab and click the “Automated Scan” button.

1. In the input field, enter the full URL of your target application.

2. Make sure the “Traditional Spider” checkbox is selected.

3. Click the “Attack” button. This is the quickest and simplest method for an initial, broad-scope scan.

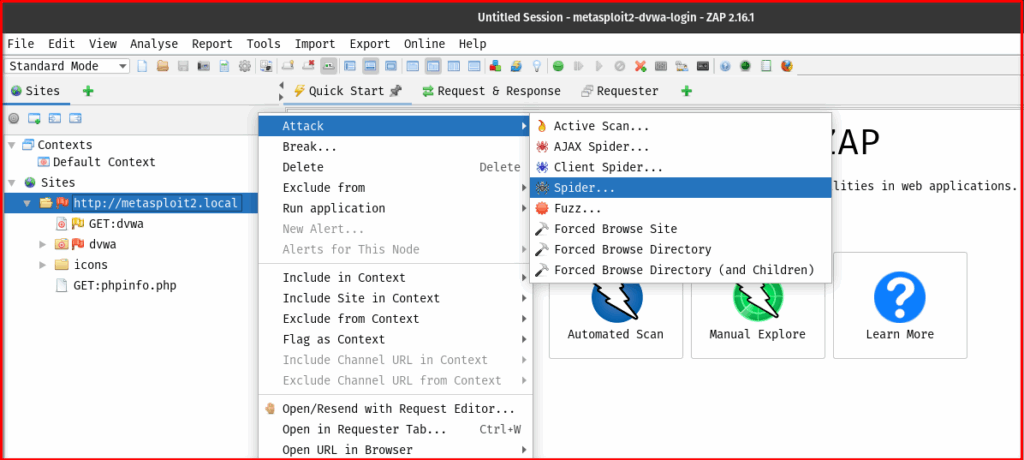

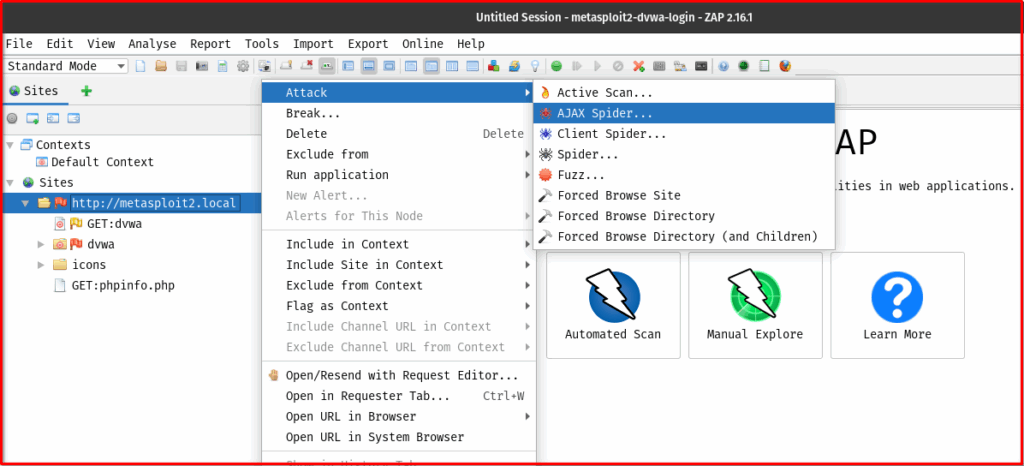

b. Manual Launch (Right-click on Site/URL):

- In the “Sites” tree located on the left-hand pane of ZAP’s interface, navigate to your target application’s root URL or a specific directory/page you wish to spider.

- Right-click on the desired URL.

- From the context menu, select “Attack” > “Spider…”

- This manual method provides you with more granular control over the spidering options before the scan begins.

c. Key Options/Settings to Consider:

When manually launching the Traditional Spider, a dialog box will appear with various configurable settings:

- Scope: This is critically important! You must define whether the spider should strictly stay “In Scope” (meaning it will only spider within the boundaries of your defined context) or if it’s allowed to venture “Out of Scope” (which is generally not recommended for targeted testing).

- Max depth: This setting limits how many levels deep the spider will traverse from the starting URL. A lower depth can speed up the scan but might miss deeper content.

- Number of threads: This controls how many concurrent HTTP connections ZAP makes to the target. More threads can speed up the crawl, but they may overwhelm the target server or trigger its rate limiting and intrusion detection.

- Forms to submit: This determines whether ZAP should attempt to automatically submit any HTML forms it discovers during the crawl.
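You can also drive these options through ZAP's REST API, which is useful for automation. The sketch below only builds the request URLs with the standard library. It assumes ZAP is listening on localhost:8080 with an API key configured. The helper names and the key "changeme" are hypothetical. The endpoint names (spider/action/setOptionMaxDepth, setOptionThreadCount, spider/action/scan) follow ZAP's API, but please confirm them against the API documentation for your ZAP version.

```python
from urllib.parse import urlencode

# Assumptions: ZAP runs locally on port 8080 and this placeholder API key is valid.
ZAP_BASE = "http://localhost:8080"
API_KEY = "changeme"


def zap_url(component: str, kind: str, name: str, **params: str) -> str:
    """Build a ZAP JSON API URL such as /JSON/spider/action/scan/?url=..."""
    query = urlencode({**params, "apikey": API_KEY})
    return f"{ZAP_BASE}/JSON/{component}/{kind}/{name}/?{query}"


def traditional_spider_requests(target: str, context: str,
                                max_depth: int, threads: int) -> list[str]:
    """Return the API calls that configure and then start the Traditional Spider."""
    return [
        zap_url("spider", "action", "setOptionMaxDepth", Integer=str(max_depth)),
        zap_url("spider", "action", "setOptionThreadCount", Integer=str(threads)),
        # contextName constrains the crawl to the named context's scope.
        zap_url("spider", "action", "scan", url=target, contextName=context),
    ]


if __name__ == "__main__":
    for url in traditional_spider_requests("http://example.com/", "MyApp", 5, 4):
        # To send a call for real: urllib.request.urlopen(url).read()
        print(url)
```

The option calls come first so the scan starts with the intended depth and thread count. Keep in mind that these setOption calls change ZAP's global spider options, not just this one scan.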

2. Launching the AJAX Spider

The AJAX Spider is also easy to launch, following a similar pattern:

a. Via “Automated Scan” (if applicable):

- Similar to the Traditional Spider, use the “Automated Scan” button from the Quick Start tab.

1. Enter the target URL.

2. Set the “AJAX Spider” drop-down to “Always”.

3. Click “Attack.”

b. Manual Launch (Right-click on Site/URL):

- In the “Sites” tree, right-click on your target URL.

- Select “Attack” > “AJAX Spider…”

c. Key Options/Settings to Consider:

The AJAX Spider dialog offers specific settings tailored for browser-based crawling:

- Browser selection: Choose which headless browser ZAP should utilize for rendering (e.g., Chrome, Firefox). It’s crucial to ensure the necessary browser drivers are installed and correctly configured on your system.

- Max crawl depth/duration: These settings limit how deep the spider will go into the application or how long it will run, which is vital for managing resources and preventing infinite crawls.

- Event wait time: This specifies how long the spider waits after simulating an event (like a click) before it checks for new DOM changes or dynamically loaded content.

- Number of browser windows: This controls how many browser instances ZAP will run concurrently. More windows can speed up the process but will consume more system resources.
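For automated runs, the same AJAX Spider settings can be sent through ZAP's API. This sketch only builds the request URLs. It assumes a local ZAP on port 8080 and a placeholder API key. It also assumes the following, which you should check against your ZAP version's API documentation:

- the ajaxSpider option action names;
- that max duration is in minutes and event wait is in milliseconds;
- the browser ID "firefox-headless".

```python
from urllib.parse import urlencode

# Assumptions: local ZAP instance and a placeholder API key.
ZAP_BASE = "http://localhost:8080"
API_KEY = "changeme"

# Options mirroring the dialog settings: action name -> (parameter name, value).
AJAX_OPTIONS = {
    "setOptionBrowserId": ("String", "firefox-headless"),  # assumed browser ID
    "setOptionMaxCrawlDepth": ("Integer", "5"),
    "setOptionMaxDuration": ("Integer", "10"),    # assumed to be minutes
    "setOptionEventWait": ("Integer", "1000"),    # assumed to be milliseconds
    "setOptionNumberOfBrowsers": ("Integer", "2"),
}


def ajax_spider_requests(target: str) -> list[str]:
    """Return the API calls that configure and then start the AJAX Spider."""
    calls = []
    for action, (param, value) in AJAX_OPTIONS.items():
        query = urlencode({param: value, "apikey": API_KEY})
        calls.append(f"{ZAP_BASE}/JSON/ajaxSpider/action/{action}/?{query}")
    # Start the crawl last, once every option is in place.
    query = urlencode({"url": target, "apikey": API_KEY})
    calls.append(f"{ZAP_BASE}/JSON/ajaxSpider/action/scan/?{query}")
    return calls


if __name__ == "__main__":
    for call in ajax_spider_requests("http://example.com/"):
        print(call)
```

Keeping the options in one dictionary makes it easy to dial back the number of browsers or the duration when scanning from a resource-constrained machine.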

Monitoring the Spidering Process

As the spiders are actively running, OWASP ZAP provides several intuitive windows to monitor their progress and view their real-time results:

- “Sites” tree: This hierarchical view, located on the left pane of ZAP’s interface, will dynamically populate with all the URLs, files, and resources discovered by both spiders. It serves as your real-time, evolving map of the application’s structure.

- “History” tab: Requests and responses that pass through ZAP are logged here, so you can inspect the raw traffic, identify patterns, and debug issues. The “Spider” and “AJAX Spider” tabs that open during a crawl also list the URLs each spider has processed, along with its progress.

- “Alerts” tab: As responses arrive during the crawl, ZAP's passive scanner may raise alerts here (for example, when certain security headers are missing). These are initial findings that warrant further investigation.

- “Output” window: This console-like window, usually at the bottom of the interface, provides verbose messages about the spider’s progress, any errors encountered, and informational notes. It’s useful for understanding what the spider is doing behind the scenes.
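When ZAP runs headless (for example, in a CI pipeline), the tabs above are not available, and progress is usually polled through the API instead. This is a sketch under the following assumptions, which are worth verifying for your version:

- ZAP listens on localhost:8080.
- spider/view/status returns a percentage string such as {"status": "42"}.
- ajaxSpider/view/status returns {"status": "running"} or {"status": "stopped"}.

The fetch parameter is injectable, so the loop can be tested without a live ZAP.

```python
import json
import time
from typing import Callable
from urllib.parse import urlencode
from urllib.request import urlopen

ZAP_BASE = "http://localhost:8080"  # assumption: ZAP's default local address


def fetch_json(url: str) -> dict:
    """Call a ZAP API view and decode its JSON body."""
    with urlopen(url) as response:
        return json.load(response)


def wait_for_spiders(scan_id: str, api_key: str,
                     fetch: Callable[[str], dict] = fetch_json,
                     interval: float = 2.0) -> None:
    """Poll until the Traditional Spider reports 100% and the AJAX Spider has stopped."""
    spider_url = (f"{ZAP_BASE}/JSON/spider/view/status/?"
                  + urlencode({"scanId": scan_id, "apikey": api_key}))
    ajax_url = (f"{ZAP_BASE}/JSON/ajaxSpider/view/status/?"
                + urlencode({"apikey": api_key}))
    while True:
        progress = int(fetch(spider_url)["status"])  # e.g. {"status": "42"}
        ajax_state = fetch(ajax_url)["status"]       # e.g. {"status": "running"}
        print(f"Traditional Spider: {progress}% | AJAX Spider: {ajax_state}")
        if progress >= 100 and ajax_state != "running":
            return
        time.sleep(interval)
```

Against a real instance, call it as wait_for_spiders("0", "your-api-key"), passing the scan ID that spider/action/scan returned.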

Advanced Spidering Techniques & Tips

To truly maximize the effectiveness and precision of OWASP ZAP’s spiders, consider incorporating these advanced techniques into your workflow:

A. Defining Scope

Scope is arguably the most critical configuration for any ZAP scan. Properly defining it ensures that the spider focuses exclusively on your intended target application and prevents it from inadvertently wandering off to external, unrelated websites.

- Include/Exclude from context: In the “Sites” tree, you can easily right-click on specific URLs or directories and choose to “Include in Context” or “Exclude from Context.” This allows you to define precisely what ZAP should and should not spider.

- Regex for fine-grained control: For more complex applications or intricate scoping requirements, you can leverage regular expressions within the “Contexts” section (accessible via File > New Context… or by right-clicking a site and selecting “Include in Context” > “New Context…”). This provides highly granular control over which URLs are considered in or out of scope.
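It helps to test scope regexes before handing them to ZAP. The sketch below models a context as a list of include and exclude patterns. It assumes that ZAP matches each pattern against the whole URL, which is why it uses re.fullmatch. The example.com patterns are hypothetical. The same patterns can be added to a real context through the context/action/includeInContext and excludeFromContext API calls.

```python
import re

# Hypothetical context: include example.com and everything below it, but keep
# the spider away from the logout link and from static images and stylesheets.
INCLUDE_PATTERNS = [r"https?://example\.com(?:/.*)?"]
EXCLUDE_PATTERNS = [r".*/logout.*", r".*\.(?:png|jpg|css)"]


def in_scope(url: str) -> bool:
    """Return True if url matches an include pattern and no exclude pattern."""
    # fullmatch mirrors the assumption that patterns must match the entire URL.
    included = any(re.fullmatch(p, url) for p in INCLUDE_PATTERNS)
    excluded = any(re.fullmatch(p, url) for p in EXCLUDE_PATTERNS)
    return included and not excluded


if __name__ == "__main__":
    for url in ["https://example.com/account",
                "https://example.com/logout",
                "https://example.com.evil.org/"]:
        print(url, in_scope(url))
```

Escaping the dot and anchoring the host matters. Without them, a lookalike host such as example.com.evil.org would slip into scope.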

B. Authentication

Spidering authenticated areas of an application is absolutely crucial for comprehensive security testing, as many vulnerabilities reside behind login walls. ZAP offers several robust ways to handle this:

- Manual Authentication (Browser): If you are primarily utilizing the AJAX Spider, you can often log in manually through the browser instance that ZAP launches. Once logged in, the spider will then proceed to crawl the application within that authenticated session.

- Scripted Authentication: For more automated, repeatable, or complex authentication flows, you can write authentication scripts (e.g., using ZAP’s built-in scripting engine, often in JavaScript or Python). These scripts handle the entire login process programmatically before the spidering begins.

- Forced User Mode: Once you have configured a user with working credentials for your context, you can enable “Forced User Mode.” While this mode is active, ZAP sends all subsequent requests, including those made by the spiders, as that user.
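Forced User Mode can also be switched on through ZAP's API. The sketch below builds the two calls involved. It assumes a local ZAP on port 8080, a placeholder API key, and a user that already exists in the context. The parameter names (contextId, userId, boolean) reflect my understanding of the forcedUser API, so please check them for your version. Alternatively, spider/action/scanAsUser runs a single crawl as a specific user without changing the global mode.

```python
from urllib.parse import urlencode

ZAP_BASE = "http://localhost:8080"  # assumption: local ZAP instance
API_KEY = "changeme"                # hypothetical placeholder key


def call(path: str, **params: str) -> str:
    """Build a ZAP JSON API URL for the given component/type/name path."""
    return f"{ZAP_BASE}/JSON/{path}/?{urlencode({**params, 'apikey': API_KEY})}"


def forced_user_requests(context_id: int, user_id: int) -> list[str]:
    """API calls that make every subsequent request use the given ZAP user."""
    return [
        # The user must already exist in the context with working credentials.
        call("forcedUser/action/setForcedUser",
             contextId=str(context_id), userId=str(user_id)),
        call("forcedUser/action/setForcedUserModeEnabled", boolean="true"),
    ]


if __name__ == "__main__":
    for url in forced_user_requests(context_id=1, user_id=2):
        print(url)
```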

C. Handling Anti-CSRF Tokens & Dynamic Parameters

Modern web applications frequently employ anti-CSRF (Cross-Site Request Forgery) tokens and various dynamic parameters to enhance security and prevent automated attacks. OWASP ZAP is intelligently designed to manage these complexities:

- Anti-CSRF Tokens: ZAP can automatically identify and correctly handle common anti-CSRF tokens. This ensures that forms are submitted accurately and that the spider doesn’t get blocked by invalid tokens during its traversal.

- Dynamic Parameters: It can also learn and manage dynamic parameters embedded within URLs and form fields. This capability allows the spider to successfully explore paths and functionalities that would otherwise be inaccessible due to constantly changing values.
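The key idea behind anti-CSRF handling is to read the current token from a freshly fetched form each time, rather than replaying an old one. This is a minimal standard-library sketch of that idea, not ZAP's internal implementation. The token names in TOKEN_NAMES are hypothetical; ZAP keeps its own configurable list under its Anti-CSRF Tokens options.

```python
from html.parser import HTMLParser

# Hypothetical token names (lowercase); ZAP maintains its own configurable list.
TOKEN_NAMES = {"csrf_token", "csrfmiddlewaretoken", "_csrf", "authenticity_token"}


class FormFields(HTMLParser):
    """Collects name/value pairs from the <input> elements of a page."""

    def __init__(self) -> None:
        super().__init__()
        self.fields: dict[str, str] = {}

    def handle_starttag(self, tag, attrs):
        if tag == "input":
            attributes = dict(attrs)
            name = attributes.get("name")
            if name:
                self.fields[name] = attributes.get("value") or ""


def build_submission(fresh_form_html: str, user_values: dict[str, str]) -> dict[str, str]:
    """Merge user-supplied values with the token found in a freshly fetched form."""
    parser = FormFields()
    parser.feed(fresh_form_html)
    tokens = {k: v for k, v in parser.fields.items() if k.lower() in TOKEN_NAMES}
    # Tokens are merged last so a stale token in user_values cannot override them.
    return {**parser.fields, **user_values, **tokens}


if __name__ == "__main__":
    form = ('<form><input type="hidden" name="csrf_token" value="abc123">'
            '<input name="comment" value=""></form>')
    print(build_submission(form, {"comment": "hi", "csrf_token": "stale"}))
```

The stale token passed in by the caller is replaced by the value from the current form. A spider that skipped this step would keep submitting rejected requests.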

D. Spidering APIs (REST/SOAP)

OWASP ZAP is not limited to traditional web pages; it is also an excellent tool for mapping and testing Application Programming Interfaces (APIs), whether they are RESTful or SOAP-based:

- Importing OpenAPI/Swagger/WSDL definitions: ZAP provides functionality to import API definitions (such as OpenAPI/Swagger JSON/YAML files or WSDL files for SOAP services).

- Parsing and spidering from these definitions: Once a definition is imported, ZAP parses it to identify the API endpoints, their expected parameters, and the HTTP methods they support, and adds them to the “Sites” tree. Those endpoints can then be actively scanned for vulnerabilities across your API landscape. This feature is especially valuable for testing modern microservice architectures.
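To show what "parsing a definition" yields, here is a small sketch that lists the (method, URL) pairs declared in an OpenAPI 3 JSON document. It is a simplified stand-in for what ZAP's OpenAPI add-on does internally (in ZAP you would import through the UI or the openapi/action/importUrl API call). The spec content is hypothetical.

```python
import json

# The HTTP operations a Path Item Object may declare in OpenAPI 3.
HTTP_METHODS = {"get", "put", "post", "delete", "options", "head", "patch", "trace"}


def list_endpoints(spec_json: str) -> list[tuple[str, str]]:
    """Return (METHOD, URL) pairs declared in an OpenAPI 3 document."""
    spec = json.loads(spec_json)
    # Use the first server URL; with no servers, fall back to relative paths.
    servers = spec.get("servers") or [{"url": ""}]
    base = servers[0]["url"].rstrip("/")
    endpoints = []
    for path, item in spec.get("paths", {}).items():
        for key in item:
            # Skip non-operation keys such as "parameters" or "summary".
            if key.lower() in HTTP_METHODS:
                endpoints.append((key.upper(), base + path))
    return endpoints


if __name__ == "__main__":
    spec = json.dumps({
        "openapi": "3.0.0",
        "servers": [{"url": "https://api.example.com/v1"}],
        "paths": {"/users": {"get": {}, "post": {}, "parameters": []},
                  "/users/{id}": {"delete": {}}},
    })
    for method, url in list_endpoints(spec):
        print(method, url)
```

Each pair is a request that no HTML link would ever have revealed. This is why importing a definition often exposes far more of an API than crawling alone.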

E. Performance Considerations

Spidering, particularly with the AJAX Spider, can be resource-intensive. Keeping these performance tips in mind will help you conduct more efficient scans:

- Adjusting threads: For the Traditional Spider, experiment with the “Number of threads” setting. Find a balance that provides good speed without overloading or causing instability on the target server.

- Limiting depth/time: For both spiders, setting a maximum crawl depth or a maximum duration for the scan can prevent infinite loops on complex applications and help manage your system’s resource consumption.

- Resource usage for AJAX spider: Be acutely mindful that running multiple AJAX Spider instances concurrently or attempting to crawl very large, complex single-page applications can consume significant CPU and RAM. Monitor your system resources during AJAX spidering.
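Depth and time limits work together. Depth bounds how far the crawl fans out, and the time budget catches applications that generate endless unique URLs. The sketch below is a toy breadth-first crawler that applies both limits. It is not ZAP's implementation: get_links is a hypothetical stand-in for fetching and parsing a page, and ZAP may count depth slightly differently.

```python
import time
from collections import deque
from typing import Callable


def crawl(start: str,
          get_links: Callable[[str], list[str]],
          max_depth: int,
          max_seconds: float) -> list[str]:
    """Breadth-first crawl that stops at max_depth or when the time budget runs out."""
    deadline = time.monotonic() + max_seconds
    seen = {start}
    visited = []
    queue = deque([(start, 0)])
    while queue and time.monotonic() < deadline:
        url, depth = queue.popleft()
        visited.append(url)
        if depth == max_depth:
            continue  # record the page, but do not follow its links any deeper
        for link in get_links(url):
            if link not in seen:  # the seen-set prevents loops such as A -> B -> A
                seen.add(link)
                queue.append((link, depth + 1))
    return visited


if __name__ == "__main__":
    site = {"/": ["/a", "/b"], "/a": ["/a/1", "/"], "/b": ["/b/1"],
            "/a/1": ["/a/1/x"], "/b/1": [], "/a/1/x": []}
    print(crawl("/", lambda u: site.get(u, []), max_depth=2, max_seconds=5))
```

With max_depth=2 the crawl visits /, /a, /b, /a/1 and /b/1 but never reaches /a/1/x, even though it exists. This is the trade-off the depth setting makes.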

Analyzing Spider Results

Once the spidering process has completed, the real work of analysis begins. The data gathered by the spiders provides a critical foundation for your security assessment:

A. Reviewing the “Sites” Tree

The “Sites” tree is your primary output and the most direct representation of what the spiders discovered. It provides a comprehensive, hierarchical list of every URL, file, and directory that ZAP’s spiders found. Take your time to thoroughly explore this tree to gain a deep understanding of your application’s actual structure and its exposed attack surface.

B. Identifying Hidden/Unlinked Resources

One of the most valuable outcomes of a thorough spidering process is the identification of resources that are not directly linked from the main navigation or typical user flows, but are still accessible. These could include forgotten administration pages, deprecated test endpoints, sensitive configuration files, or other resources that were never intended to be publicly exposed. Discovering these can reveal significant security risks.

C. Preparing for Active Scanning

The meticulously gathered output of the spidering phase forms the fundamental basis for any subsequent active vulnerability scans. By knowing all accessible URLs, their associated parameters, and the methods they support, OWASP ZAP can then launch highly targeted and effective attacks to uncover common vulnerabilities such as SQL injection, Cross-Site Scripting (XSS), broken authentication, and many others. A thorough and well-executed spidering phase directly translates to a much more effective and comprehensive active scan.

Final Thoughts

OWASP ZAP’s spider features are truly indispensable tools in the arsenal of any web application security tester or developer committed to building secure applications. By intelligently leveraging both the rapid Traditional Spider and the dynamic, browser-based AJAX Spider, meticulously defining your scan scope, and understanding how to handle complex authentication mechanisms and modern API structures, you can gain an unparalleled and accurate understanding of your application’s true attack surface.

Don’t leave any stone unturned in your pursuit of web application security! We strongly encourage you to start experimenting with OWASP ZAP’s powerful spidering capabilities today. Uncover every accessible resource, identify potential hidden pathways, and significantly strengthen the overall security posture of your web applications. Happy hunting!